Apple can lead AIs to knowledge, but it cannot make them think

Some thoughts on a recent research paper

I’ve been making it a point to ramp up my engagement with generative AI (GenAI) this year. It seems very likely at this point to be a defining technology of this decade.

My go-to way to learn about what’s up and coming in deep tech fields is to read papers (often on arXiv, but really wherever I can find them). Late last week, I saw on X that Apple released a paper about a path that Large Language Model (LLM) development has taken recently.

It seemed to make waves on X among technical subject matter experts across institutions. That means it’s worth paying attention to as an investor, because if the results are replicable, they’re going to impact the VC-backable AI landscape.

The Paper

In “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity”, Parshin Shojaee et al. consider the development trajectory of Large Reasoning Models (LRMs). The paper evaluates some of the GenAI tools that have become very popular among consumers, such as OpenAI’s o1/o3, DeepSeek-R1, Claude 3.7 Sonnet, and Google’s Gemini.

The authors study how LRMs think by exposing them to problems of varying complexity. This is different from the standard approach of giving the models math problems, and well justified by the authors. Some of the less obscure problems include The Tower of Hanoi and a sort of one-dimensional Checkers.

The paper accomplishes three things.

First, it shows that LRMs can’t develop what the authors call “generalizable problem-solving capabilities for planning tasks”, and the authors explore what this means in some depth. This is particularly striking in the Tower of Hanoi, because the puzzle is commonly used in data structures courses to teach recursion, which I think of as a generalized solution (the underlying math is a proof by induction, so recursion works for towers of all sizes). Beyond that, the authors find that LRM performance falls off past a certain level of complexity.
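To make the “generalized solution” point concrete, here is a minimal sketch of the textbook recursive Tower of Hanoi solver. It is my own illustration and not code from the paper; the function name `hanoi` and the peg labels are assumptions chosen for the example.

```python
def hanoi(n, source, target, spare):
    """Return the list of (from_peg, to_peg) moves that transfers n disks."""
    if n == 0:
        # Base case: no disks, so no moves are needed.
        return []
    return (
        hanoi(n - 1, source, spare, target)    # park the top n-1 disks on the spare peg
        + [(source, target)]                   # move the largest disk to the target
        + hanoi(n - 1, spare, target, source)  # bring the n-1 disks back on top of it
    )


# Hypothetical peg labels; the same function handles any tower height.
moves = hanoi(3, "A", "C", "B")
print(len(moves))  # 7, which is 2**3 - 1
```

The same few lines solve a tower of any size, with the move count growing as 2^n - 1. That is the sense in which recursion is a general solution rather than a memorized answer for one particular tower.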

Second, it compares LLM and LRM performance across problems of varying complexity.

Finally, it evaluates LRM intermediate reasoning as LRMs solve problems of varying complexity.

The paper concludes by identifying a different heuristic for comparing AI tools, identifying several limits of LRMs, and raising questions about how to measure AI performance.

It is not the first piece of writing that I’d recommend to somebody looking to learn about the state of the art in AI, but I found it accessible as a non-expert reader with some background in computer science.

If you invest in startups, like my writing, and want to chat about possible career opportunities, don’t hesitate to be in touch. The best way to get ahold of me is via email:

The incentives

The incentives for the individual authors are quite clear — publishing helps other scientists take them more seriously as individual researchers, validates the rigor of the experimental process, advances their career, and pushes forward the state of the art in their field.

The much more interesting conversation is about the incentives for Apple, listed right below the authors. Apple wants to be a place where great research happens, because that helps it attract the world-class talent for which it is known. That’s the top-level incentive for the firm here.

But the fact of the matter is that Apple hasn’t been doing so hot recently in its AI R&D outcomes.

Apple’s longest-lasting AI product to date is Siri, the voice AI assistant Apple acquired in 2010 and integrated into iOS the following year. While it’s a natural language interface, it seems to use machine learning rather than GenAI as the underlying technology.

GenAI has begun to play a bigger role in Apple’s plans for the future; it announced Apple Intelligence in 2024. Apple Intelligence pairs an on-device model with a cloud model, both LLM-based, and provides a number of additional functions to equipped devices. These include proofreading, image generation, mail prioritization, photo search, notifications, and an overhaul to Siri.

But in March 2025, Apple delayed its more powerful AI-enabled Siri.

This past week, at Apple’s Worldwide Developers Conference (WWDC), the news wasn’t all that much better. Apple announced that it’s creating a variety of tools to let developers use Apple’s Artificial Intelligence models on-device (which saves on data and cloud computing costs).

My impression from media coverage of WWDC is that Apple is somewhat successful at building GenAI applications in consumer verticals (“AI for the rest of us”). However, the firm comes across as struggling to build the infrastructure necessary to release a generalist GenAI tool.

An article about the event in The Wall Street Journal (WSJ) highlighted the pressure Apple is under to deliver on its Apple Intelligence commitments. It noted that Apple is fairly far behind several big tech peers, particularly Microsoft, Meta, and Google.

On some level, Apple is incentivized to share this paper as a defense against all this bad news. It is basically using the research to contextualize the negative news by claiming “it’s not us at Apple, it’s how the state of the art of the underlying technologies fails to meet the high bar for our products”.

It might be true. However, investors didn’t seem to buy it, as shown below by the general downward trend of the share price over the past week, and the past several months.

Looking forward, WSJ also raised the point that OpenAI seems poised to enter the consumer device business, especially in light of its decision to acquire Jony Ive’s startup and hire him. Given Apple’s situation, we’re likely to find out over the next few years whether a device and software company that essentially bolts on AI to existing consumer technologies can build a better product than an AI company that creates a physical device.

What does it mean…

…for the rest of big tech?

WSJ’s assessment of Apple suggests that Google, Meta, and Microsoft have better positioning in the AI market. Of course, there’s more to Big Tech than them, but they look like the most directly competitive firms in terms of software offerings.

Another article in WSJ announced this week that Meta is planning to invest more than $10 billion in Scale AI, a data labelling company, and acquire 49% of the firm. Simultaneously, Meta would bring on Scale AI’s CEO as part of a broader AI leadership reshuffle. There are certainly strategic benefits for Meta, but some portion of this reads like an acquihire.

And a lot of Microsoft’s exposure to AI comes from its complex relationship with OpenAI.

If everybody is having some issues building this tech internally and taking an M&A-driven approach to growth in the space, maybe the point here is really that Big Tech is just behind startups in this space? Maybe these firms have to either acquire their way to success in the domain, or they’ll be replaced as industry leaders by the next generation of startups?

The paper also suggests that we’re farther away from Artificial General Intelligence (AGI) than we might have thought. I think that should affect how big tech companies spend their R&D dollars allocated to AI, in that there should be less of a push to get there at the expense of other GenAI applications that’d serve users more quickly.

We need to understand approaches to GenAI in more depth, and perhaps develop new ones, as we work towards AGI. Big Tech firms might have more money to throw at this, and be able to spend a higher percentage of their time on basic research than a startup, but they are still beholden to their users, buyers, and shareholders. Ultimately, that, and not some vision about impending Kurzweilian futurism, is what should be driving development efforts in big tech GenAI.

…for smaller businesses who make AI products?

This issue affects smaller AI companies deeply as well.

Garry Tan, who runs Y Combinator, interviewed Michael Truell, one of the founders at Anysphere (which makes Cursor, an AI-powered code editor). He posted a clip on X from a broader interview on YouTube indicating that Cursor is also encountering challenges implementing agents with long context.

On some level, this isn’t surprising to me. Context constraints, after all, impact how humans think. Certainly as a software developer, I encountered and watched my colleagues deal with context switching productivity impacts, and had to work hard to keep the context of multiple simultaneous projects top of mind.

As we’ve focused on human-like approaches to AI (such as neural networks), it seems natural to me that at some point, we’d encounter roadblocks which are characteristically similar to how humans behave. We’ve encountered this before in AI hallucinations: sometimes people make factual errors when they talk, or just make things up.

This has me wondering whether we will figure out a solution fairly quickly, or whether this is a longer-term plateau that we’re going to need to spend some serious time thinking about how to overcome.

I don’t have a prescriptive view on the best path forward here, but I’ll be watching closely to see how the sector’s composition and research focus areas change over the next several months.

…for businesses who use AI products?

I think the key takeaways here are really about:

the types of problems given to AI tools

the anticipated rate of improvement of AI tools

These things are technically separate, but really they’re intertwined. As AIs encounter tasks, they improve — so giving them more problems, as well as more types of problems, should cause AI tools to improve more quickly.

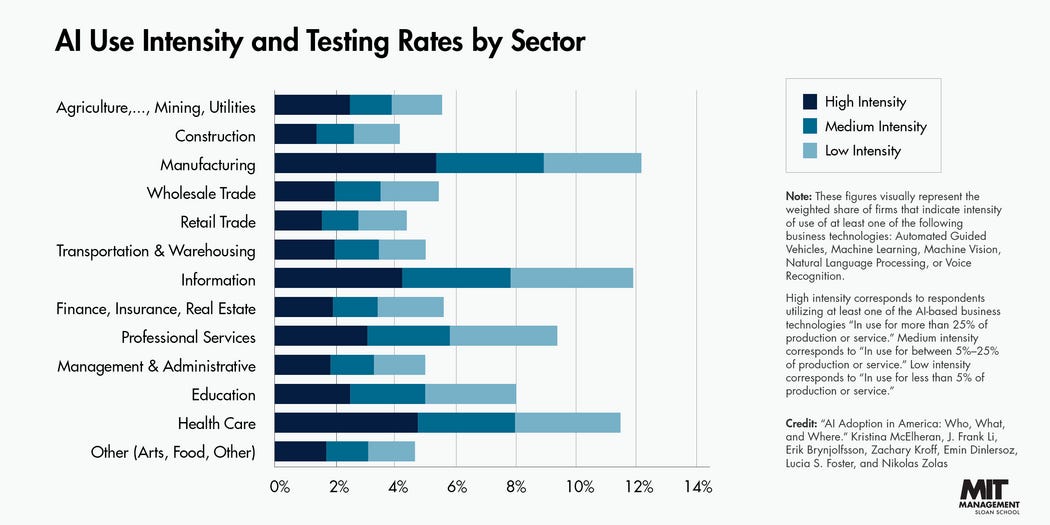

My impression from this MIT Sloan chart is that B2B AI adoption rates today are highest in sectors of the economy with lots of well-structured data.

Manufacturing, information (tech), and healthcare have the most high-intensity adoption, as well as the most adoption overall.

Based on this Apple paper, I anticipate that trend strengthening in the near-to-medium term. My view is that AI’s rate of improvement is going to slow (tools will still get better, but less steeply) until this context limitation problem is overcome.

The best thing, I think, that business operators can accomplish right now is to get better at structuring their data so the AI tools currently on the market can take advantage of it.

For founders, I think vertically integrating AI model development with data structuring for specific sectors with low AI adoption is going to be a very interesting space.

A final word

Apple’s paper last week publicized something that I’m told AI users have been aware of for a while. Experimentally observing the phenomenon raises key issues that should drive the future of AI research.

But that is not enough.

These open questions should also affect GenAI utilization across firms of all sizes today.

This includes VC funds.